Justine Cassell is a flying car

Flight: A series of conversations Flying Car is having with individuals who are contributing, surprising, and delighting the world with something new.

Justine Cassell is bringing relationship potential to robots. More accurately, she’s helping lead the design of Artificial Intelligence that can recognize and respond to what are known as “social states.” What are “social states”? They are psychological states (e.g., trust, rapport, intimacy) expressed in conversation and ways of behaving. From vulnerability to interest, these visual and tonal expressions are critical for building trust and, in Cassell’s words, engendering “social reciprocity.”

A lifelong educator, Cassell is particularly interested in the possibilities for AI to contribute to everyday learning. While AI may not actually lead classrooms, she sees its potential to serve as a personal tutor. A primary hurdle to that vision is building AI that can “read” basic human social cues. Without it, there is no chance for the interpersonal bond Cassell defines as essential to effective teaching. She’s leading efforts to solve that critical piece of AI architecture.

In the last two decades, Justine Cassell’s AI research has consistently garnered serious investment as well as attention from the “who’s who” of major global corporations. Searching for new paths to better computer-human interaction, Cassell has led efforts at MIT Media Lab, Northwestern University, and, more recently, Carnegie Mellon’s School of Computer Science. All this time she’s been reinventing how humans interact with computers.

After completing a Ph.D. in psychology and linguistics, Cassell came to computers inadvertently, looking for ways to model and break down the nature of human conversation. Her instinct for cross-disciplinary studies and passion for research totally fit within the emergent geography that AI inhabits. Since then she’s immersed herself in its evolution. Among her concerns is (in her words) “counteracting a negative opinion that a lot of the world has of AI as killer robots… wanting to demonstrate in other ways how it can be used for good.” We talked to Cassell recently about her current work.

Q&A

What’s getting you into the lab enthusiastically these days?

Tackling some really tough problems that I’ve been trying to address for much of my career. Recently we built the very first socially aware computational architecture that can take into account the dynamic rapport in relationships between people and computational agents. And particularly their social dyadic state. In other words, their interpersonal closeness with the computer, as if the computer were another person. This has been the holy grail for me for a very long time. I’ve done small aspects of it before but never this major. That’s super exciting.

And I like the uses to which we’re able to put this social architecture. So at the same time that I’m building a socially aware personal assistant – which is a good demo but not necessarily good for the world – I’m also building a socially aware peer tutor. We know that’s for the good of the world because we know that students who come from under-resourced schools are most likely to need a social infrastructure to learn. So we’re building a kind of educational technology that can really do some good in the communities where it’s needed.

Can you elaborate on what you mean by “social infrastructure”?

When we look at how people work with other people – how we collaborate – we tend to assume that work is work and social is social. In other words, we assume that when we work together, we talk about work, while when we’re being social we talk about things like the recent ball game or what we’ve been doing lately. But that’s not actually true. In all interactions, there’s a social part and there’s a task part. There’s always a social part.

In other words, when you start a conversation with somebody, virtually always you start by checking in – by engaging in a little small talk. For the longest time, people who built technology for collaboration have called that part of the conversation “off-task talk,” or “the part of the interaction that doesn’t have to be modeled.” We found that’s not true. Indeed, that does have to be modeled. It’s an essential part of work. That is the social infrastructure of work. Social infrastructure is the thing that allows us to get on the same page with the person we’re working with.

So connect that to what you’re developing around a “socially aware peer tutor.” How does the social infrastructure fit in there?

I’ll give you a great example. When I arrived at Carnegie Mellon, a graduate student here had done a great doctoral dissertation on how to build chat technology to help peers tutor one another in math. She had teens tutor each other over a chat interface. There was a whole part of that chat that she hadn’t looked at because it was “off task.” But when we looked at it closely, we discovered that while it wasn’t about math, per se, it actually played a role in predicting who learned and who didn’t learn. The data thrown out that was initially thought irrelevant actually turned out to be really important. What we discovered was that friends who insult each other, they learn better. Well, if you ever spent time with a teen, you know insulting one another is an essential part of their social life. That’s social infrastructure. We’re doing work that establishes that, and that establishes how to use that fact to build better tutoring software.

Establishing that we should insult each other?

(laughing) Exactly.

Joking aside, where do you see the most immediate applications in peer-to-peer tutoring?

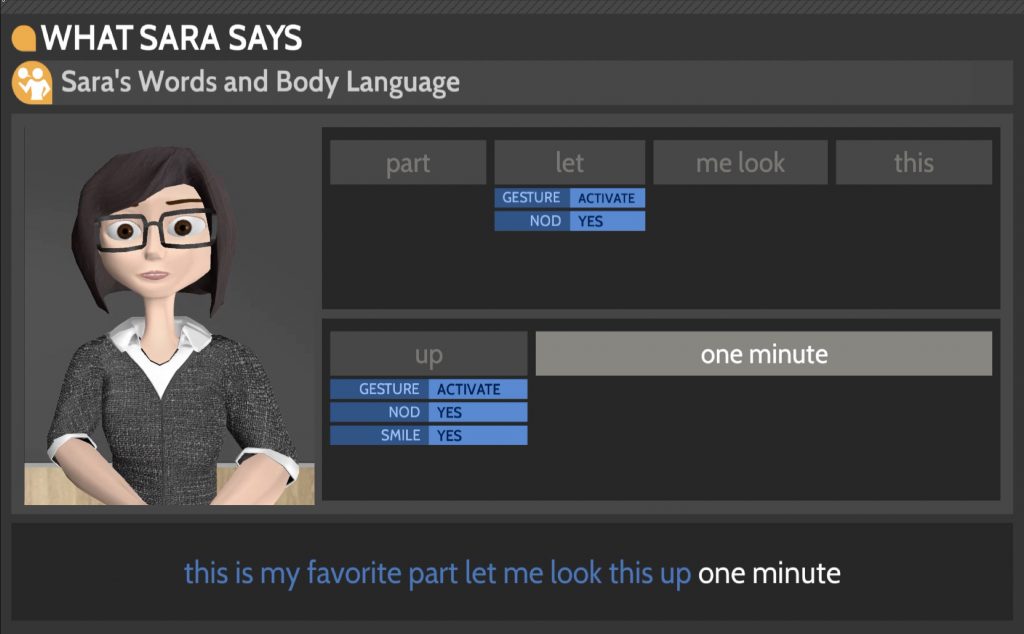

We’re now building a peer tutor that tracks in real time the level of rapport or interpersonal closeness between the educational technology and the real child. It uses that level of closeness to improve its ability to teach.

Let me give a little bit more explanation. When we learn something from somebody else, we’re opening ourselves up to ridicule in some way. The act of learning with somebody else is intrinsically face-threatening, meaning it intrinsically makes us vulnerable. But until now we haven’t built technologies that take that into account: technologies that build interpersonal closeness in ways that make it easier for the kid being tutored to become vulnerable. We’re realizing that’s really important. And that’s what opens up the field of learning.

It’s a kind of adaptive learning. Adaptive personalized learning is a big thing right now but no one has done it this way before. No one has looked at the social infrastructure. The nature of the social interaction between the learner and the teacher. And the teacher in this case is a computer. But that doesn’t mean that the teacher – the computer – should be any less capable of building a social interaction.

Can you connect the insults to that vulnerability? How do insults make people less vulnerable while learning?

It’s usually the people being tutored who are insulting the tutors. We believe what they might be doing is trying to redress the imbalance of power. So that even though there’s teaching going on, they still have some power. And so that insulting is a way of leveling the playing field.

How would it be used computationally – how would you take that and apply it?

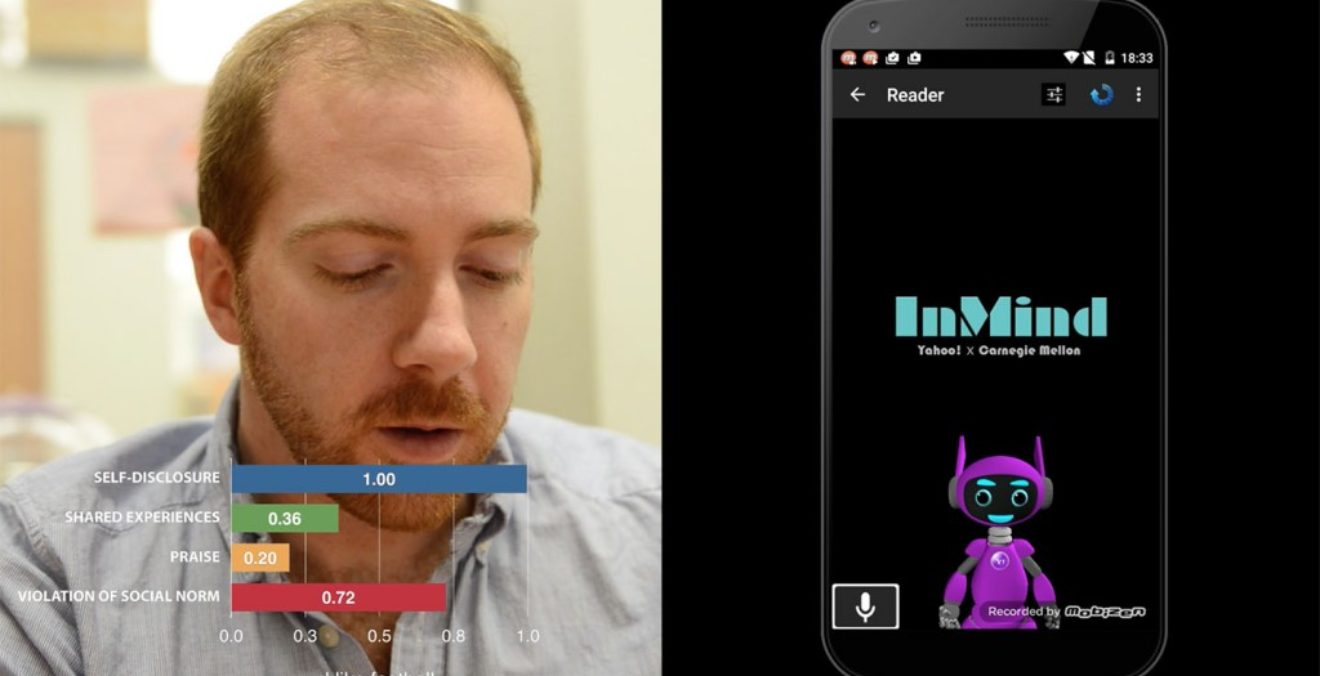

It’s interesting. Let me explain. We built three computational modules that nobody has ever built before.

The first is a real time rapport estimator. It takes input from the visual system – from a person’s non-verbal behaviors. It also takes input from the vocal system – acoustics – that is the sound quality of your voice. And it takes input from the verbal or linguistic system – what you say. It takes all that input every 30 seconds of the interaction to determine what your level of rapport is with the virtual person displayed on the screen.
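To make the idea of a windowed, multimodal estimate concrete, here is a minimal sketch of how such an estimator might be organized. It assumes that upstream detectors already turn visual, vocal, and verbal cues into per-cue scores in [0, 1], and it fuses them with a fixed weighted average per 30-second window. The `Observation` type, the channel weights, and the averaging scheme are all hypothetical illustrations, not the lab’s actual model, which would be learned from annotated interaction data.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean

WINDOW_SECONDS = 30  # one estimate per 30-second slice, as described above

# Hypothetical fusion weights; a real estimator would learn these from annotated data.
WEIGHTS = {"visual": 0.4, "vocal": 0.2, "verbal": 0.4}


@dataclass
class Observation:
    t: float      # seconds since the session started
    channel: str  # "visual", "vocal", or "verbal"
    score: float  # hypothetical per-cue rapport signal in [0, 1]


def rapport_per_window(observations):
    """Return {window_index: fused rapport score in [0, 1]}."""
    # Group scores by 30-second window, then by channel.
    buckets = defaultdict(lambda: defaultdict(list))
    for obs in observations:
        buckets[int(obs.t // WINDOW_SECONDS)][obs.channel].append(obs.score)

    estimates = {}
    for window, channels in sorted(buckets.items()):
        # Average each known channel within the window; unknown channels are ignored.
        present = {c: mean(v) for c, v in channels.items() if c in WEIGHTS}
        if not present:
            continue
        # Weighted late fusion; renormalize if a channel is missing in this window.
        total_w = sum(WEIGHTS[c] for c in present)
        estimates[window] = sum(WEIGHTS[c] * s for c, s in present.items()) / total_w
    return estimates


obs = [
    Observation(5, "visual", 0.8),
    Observation(12, "verbal", 0.6),
    Observation(20, "vocal", 0.5),
    Observation(40, "verbal", 0.2),  # only the verbal channel in the second window
]
print(rapport_per_window(obs))
```

Late fusion (scoring each channel separately, then combining) is only one option; a learned model could instead consume all three feature streams jointly.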

Then, all that information is joined with another module that’s called the “conversational strategy classifier,” which automatically – in real time – determines what kinds of linguistic strategies the person is using to fulfill his/her goals.

For example, suppose there’s a person being tutored. At some point she could say, “I don’t understand this – what should I do next?” Or she could say, “I really suck at linear equations. I’m just not able to do this at all.” The second response is called “negative self-disclosure.” In human-to-human interactions, it leads to interpersonal closeness, or rapport. Well, those are two very different kinds of conversational strategies a person could take. That’s the second module.
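The distinction between the two utterances above can be illustrated with a deliberately simplified, keyword-based stand-in for a strategy classifier. Only the label “negative self-disclosure” comes from the interview; the `help_request` label, the regular-expression cues, and the first-match rule are hypothetical. A real-time classifier like the one described would be trained on annotated dialogue rather than built from hand-written rules.

```python
import re

# Hypothetical cue patterns; a deployed classifier would be trained on annotated
# dialogue rather than hand-written rules. ['’] accepts straight or curly apostrophes.
STRATEGY_PATTERNS = {
    # Checked first, so an utterance that both discloses and asks for help
    # is labeled as self-disclosure.
    "negative_self_disclosure": [
        r"\bI (?:really )?suck at\b",
        r"\bI['’]?m (?:just )?(?:not able|unable) to\b",
        r"\bI['’]?m (?:so )?bad at\b",
    ],
    "help_request": [
        r"\bI don['’]?t understand\b",
        r"\bwhat should I do\b",
    ],
}


def classify_strategy(utterance: str) -> str:
    """Return the first strategy label whose patterns match, else 'other'."""
    for label, patterns in STRATEGY_PATTERNS.items():
        if any(re.search(p, utterance, flags=re.IGNORECASE) for p in patterns):
            return label
    return "other"


print(classify_strategy("I don’t understand this – what should I do next?"))  # help_request
print(classify_strategy("I really suck at linear equations. I’m just not able to do this at all."))  # negative_self_disclosure
```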

The third module we built is something we call “a social reasoner.” The way to think about that is that every computational AI system has a reasoning module. A module that tries to understand the intentions of the person, then tries to respond reasonably to those intentions. For example, if you call United and get a computer system, and it asks you what you want – then you tell it that you want to buy a ticket to Galveston, Texas, the system’s reasoning module takes the understanding of what you said and it reasons about what its next move should be. Which is to propose some possible routings to Galveston and some possible times and tell you the costs of each ticket. That’s a regular reasoning module.

But the reasoning module we built also reasons about social state. The social reasoner reasons about the fact that you and the computer you are talking to might be at a low rapport level – your relationship may not be smooth or harmonious. You may not feel as if the computer understands you or is effectively taking care of your needs, for example. So if the computer’s goal is to teach you something that is going to be difficult (and therefore potentially embarrassing) for you, it will realize that it should build rapport before attempting that task, and it will choose language with which to teach you that takes into account your potential vulnerability. That’s social reasoning.
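The decision described here (build rapport first when a hard step meets low rapport, otherwise teach with language that respects the learner’s vulnerability) can be sketched as a small rule-based policy layered on top of ordinary task reasoning. The threshold, the strategy names, and the `Move` structure below are hypothetical; this is an illustration of the idea under those assumptions, not the architecture’s actual implementation.

```python
from dataclasses import dataclass
from typing import Optional

LOW_RAPPORT = 0.4  # hypothetical threshold on a rapport estimate in [0, 1]


@dataclass
class Move:
    task_action: Optional[str]  # the task reasoner's proposed step, or None if deferred
    social_strategy: str        # how the turn should be framed socially


def social_reason(task_action: str, task_is_hard: bool, rapport: float) -> Move:
    """Combine the task reasoner's proposal with the current social state."""
    if task_is_hard and rapport < LOW_RAPPORT:
        # Difficult (face-threatening) step at low rapport: build rapport first.
        return Move(task_action=None, social_strategy="build_rapport")
    if task_is_hard:
        # Rapport is adequate: proceed, but phrase it to protect the learner's face.
        return Move(task_action=task_action, social_strategy="face_saving_instruction")
    # Easy step: the ordinary task reasoning result is used as-is.
    return Move(task_action=task_action, social_strategy="plain_instruction")


print(social_reason("present_linear_equation", task_is_hard=True, rapport=0.2))
# Move(task_action=None, social_strategy='build_rapport')
```

A fuller social reasoner would presumably choose among many more strategies and draw on both the rapport estimate and the user’s detected conversational strategy; this only shows the defer-or-reframe choice.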

One of the things on your site was focused on targeting African American youngsters learning science and math. Can you brief us on what you’re doing there?

I’m really interested in the socio-cultural aspects of human-computer interaction. Exploring ways to use what we know about culture and the social world to improve computers’ ability to help people and support them in their goals. As an example of that, the project you refer to concerns how to improve educational technology for children who may speak a local dialect at home that’s different from the formal dialect used in their classroom.

This project was motivated by teachers coming to us saying that they need children to learn standard, more formal American English. That’s the dialect spoken by those in power in America today. And in order to reap the benefits of our mainstream education, young people have to learn how to speak that mainstream school American English.

The teachers told us that they were not successful at teaching the mainstream or standard dialect – and we saw that ourselves in observing their classrooms. In fact, there was no place in the curriculum where teachers explicitly taught the standard dialect, nor even discussed differences between dialects. The idea of the teachers we worked with was that we should build a virtual tutor who would look like a youngster – look like the children in the classrooms – but who would speak only the standard school American dialect. They thought that maybe children would learn that standard classroom dialect better from virtual peers than from teachers. And they thought that children would learn science better from virtual peers if the digital peer spoke the more formal American dialect.

We know that kids are going to do better in class (and get better grades) if they speak the standard school dialect when they speak to the teacher. Because that’s what a teacher expects from them. But we weren’t sure whether a digital tutor who spoke only that dialect would be better than a digital tutor who spoke the dialect used by the children outside the classroom. It’s an empirical question.

On the basis of my background in linguistics, I, on the other hand, wondered whether it might be more helpful for these children to work with a computer that speaks two dialects – speaking the local dialect for the brainstorming part of the interaction with the computer – and then switching to the school dialect for a presentation to the teacher.

So we built two versions of a virtual child. Both versions looked African-American. One version spoke the school American English dialect in brainstorming with the children about a science task, and then spoke the same school dialect when practicing a presentation to the teacher. The second digital peer tutor spoke two different dialects for the two different contexts. In brainstorming with the kids, it spoke a local African American dialect used in Pittsburgh. It then switched to the school dialect when they were practicing a presentation for the teacher.

Again, we had two versions. One that speaks the school American English all the way through. And the other that brainstorms in the local vernacular dialect and then switches to the school dialect for practicing presentation to the teacher. What we found was that the version that speaks the vernacular during brainstorming and then switches to the school dialect during the practice of presentation to the teacher – those children working with that digital tutor did better in science. Which is a really radical result.

Enabling AI to engage with children has a powerful impact

Okay, let’s say that’s great for a percentage of African-American kids speaking a local vernacular. But what does that mean for the rest of the world?

It means a lot. Because there are dialects in every country. And wherever there are dialects, there’s a power relationship between them: one dialect has more power than another. So having a technology that’s capable of speaking in dialect when a person is brainstorming – say alone with other kids – and then switching to the standard – thereby modeling the fact that when a grownup comes in you should switch to the standard – that’s a good thing. It allows the child to concentrate on science during the brainstorming rather than having to think about language and science at the same time, and it allows the child to work with somebody who speaks like he or she does, but models switching for a reason – because the teacher is there.

It’s also relevant in any case where kids come to school speaking one language and the teacher wants them to speak another; we don’t think that will be any different from the dialect case. So having a digital peer who speaks Italian, and then can switch to English during the presentation to the teacher, may help bilingual children learn a second language. That’s what’s paradoxical and powerful: it’s by speaking two languages that the children learn the standard better.

Is it applicable to adults?

Any place where our socio-cultural roots are an important aspect of our identity is a place where you can use something like this.

We seem to assume that technology that we’ve built is culture free. That’s not the case. Every designer of a piece of AI technology is making a choice about how it speaks (and what it looks like if there’s an image of it). Those decisions are not without ramifications.

What we’re finding more broadly is that making a technology that looks like the children it’s working with and also sounds like them has a positive impact on their ability to do real work. That’s something we needed to know. It’s the same for adults. We have to take those things into account.

What most excites you about that from a larger, real world perspective?

I think there’s such an opportunity to take the issue of personalization more seriously. We talk about personalization with Siri or Alexa; all of these systems are supposed to personalize computing. In fact, they’re really just search with a voice. That’s not personalization. It’s not as good as we can get. One of the real business opportunities is to personalize AI by using many more of the things we know about people. In other words, we can improve human-to-computer interaction enormously by using the data we have on human social interaction.

Final question, looking at the world today, what most excites you?

This is going to sound like a funny thing to be excited about. But it’s that there is a tremendous amount of negative sentiment today. The elections are a good example of that. And I think that we have an opportunity to push back against that. We have an opportunity to show what it means to be a good person through our actions as technologists and as academics. I think it’s really important to model that for the world.